Serverless file transfers between S3 and a remote SFTP server using AWS Transfer Family SFTP Connectors

Introduction

In a previous blog post, I covered how AWS Transfer Family can be used to upload files from a server to Amazon S3 over SFTP. This involved creating an AWS Transfer Family server instance configured to use the SFTP protocol. An SFTP client could then initiate an SFTP connection to the AWS Transfer Family server instance to transfer files to and from an S3 bucket.

In July 2023, AWS announced the launch of SFTP connectors in the AWS Transfer Family service. With this launch, it is now possible to initiate the SFTP connection from the "AWS side" to a remote SFTP server. Using the SFTP Connector, you can transfer files between S3 and a remote SFTP server without the need for an AWS Transfer Family server instance. This opens up options for automating SFTP transfer jobs with other AWS services such as Amazon EventBridge and AWS Lambda.

In this blog post, we will have a look at using AWS Transfer Family SFTP Connector to transfer files to and from an S3 bucket. We will also automate the transfer of files placed in an S3 bucket to a remote SFTP server.

Exploring the AWS Transfer Family SFTP Connector

An SFTP Connector is a resource in AWS Transfer Family that allows you to transfer files between an S3 bucket and a remote SFTP server. The SFTP Connector does not require an AWS Transfer Family server instance.

AWS Secrets Manager is used to store the SFTP user credentials. The credentials can be a username, password and/or private key.

An IAM Role is attached to the SFTP Connector to provide access permissions to the files/objects in the S3 Bucket and SFTP user credentials in AWS Secrets Manager.

The SFTP Connector has an optional configuration to log transfer events to CloudWatch Logs.

The remote SFTP server must be publicly accessible for usage with the SFTP Connector.

At the time of writing, the SFTP Connector only supports connections to a remote SFTP server over the public internet. Unfortunately, it is not possible to set a static source IP address on the SFTP Connector, so you cannot set up IP allow list rules on your remote SFTP server. The AWS FAQs suggest contacting AWS Support if you have a use case that relies on SFTP connectors with static IP addresses.

SFTP connectors are a new addition to the AWS Transfer Family service. AWS may add more features and functionality to them if customer demand grows.

Creation of the SFTP Connector with logging to CloudWatch Logs

AWS documentation provides instructions on how to create the SFTP Connector along with the required IAM role and Secrets Manager secret. This is available here: https://docs.aws.amazon.com/transfer/latest/userguide/configure-sftp-connector.html

For the SFTP Connector to log file transfer events, an additional Logging IAM role is required. Below are an example trust policy and permissions policy that allow the Logging role to write logs to CloudWatch Logs.

Example Logging role trust policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AWSTransferFamily",
      "Effect": "Allow",
      "Principal": {
        "Service": "transfer.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

Example Logging role permissions policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "CloudWatchLogs",
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogStream",
        "logs:CreateLogGroup",
        "logs:PutLogEvents"
      ],
      "Resource": [
        "*"
      ]
    }
  ]
}
```

You can attach the Logging IAM role while creating the SFTP Connector or after it has been created.

The screenshot below shows the Edit connector configuration for an example SFTP Connector. Here, you can specify the URL of the remote SFTP server. You can also specify the Access role (for S3 and AWS Secrets Manager) and an optional Logging role.
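If you prefer to script the Logging role rather than use the console, the sketch below creates the role from the two example policies above and attaches it to an existing connector. It assumes iam and transfer are Boto3 clients (boto3.client("iam") and boto3.client("transfer")); the role and inline policy names are hypothetical placeholders. It uses the IAM CreateRole and PutRolePolicy calls and the Transfer Family UpdateConnector call with its LoggingRole parameter.

```python
import json

# Trust and permissions policies copied from the examples above.
LOGGING_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Sid": "AWSTransferFamily",
        "Effect": "Allow",
        "Principal": {"Service": "transfer.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}

LOGGING_PERMISSIONS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Sid": "CloudWatchLogs",
        "Effect": "Allow",
        "Action": ["logs:CreateLogStream", "logs:CreateLogGroup", "logs:PutLogEvents"],
        "Resource": ["*"],
    }],
}


def attach_logging_role(iam, transfer, connector_id, role_name,
                        policy_name="sftp-connector-logging"):
    """Create the Logging role and attach it to an existing SFTP Connector.

    role_name and policy_name are hypothetical; pick names that suit your account.
    Returns the ARN of the new role.
    """
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LOGGING_TRUST_POLICY),
    )
    # Attach the permissions as an inline policy on the role.
    iam.put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(LOGGING_PERMISSIONS_POLICY),
    )
    role_arn = role["Role"]["Arn"]
    # Equivalent to choosing the Logging role in "Edit connector configuration".
    transfer.update_connector(ConnectorId=connector_id, LoggingRole=role_arn)
    return role_arn
```

IAM is eventually consistent, so a role created seconds earlier may not be usable immediately; in practice the UpdateConnector call may need a short wait or a retry.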

Using the SFTP Connector with the AWS CLI

The AWS CLI can be used to transfer files using the SFTP Connector. The command to do this is aws transfer start-file-transfer.

This command can transfer up to 10 files at a time using the SFTP Connector. After the command runs, it returns a unique ID for the file transfer. You can then look up this ID in the SFTP Connector's CloudWatch Logs to find the outcome of the transfer.
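Because each call accepts at most 10 file paths, sending a larger set of files means splitting it across several calls, each with its own transfer ID. A minimal Boto3 sketch, assuming transfer is a client from boto3.client("transfer") and that all paths go to the same remote directory; the function name is hypothetical.

```python
def start_transfers_in_batches(transfer, connector_id, send_file_paths,
                               remote_directory_path, batch_size=10):
    """Send any number of S3 objects by splitting them across StartFileTransfer calls.

    Each call carries at most batch_size paths (10 is the per-call limit).
    Returns one TransferId per call, in order.
    """
    if not 1 <= batch_size <= 10:
        raise ValueError("batch_size must be between 1 and 10")
    transfer_ids = []
    for start in range(0, len(send_file_paths), batch_size):
        batch = send_file_paths[start:start + batch_size]
        response = transfer.start_file_transfer(
            ConnectorId=connector_id,
            SendFilePaths=batch,
            RemoteDirectoryPath=remote_directory_path,
        )
        transfer_ids.append(response["TransferId"])
    return transfer_ids
```

Each returned ID can then be looked up separately in the connector's CloudWatch Logs.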

It is important to note that using the AWS CLI (or an AWS API call) to transfer files with the SFTP Connector does not provide the same functionality as transferring files with SFTP commands. One key difference between them is described below.

When transferring files with the SFTP Connector, the specific source file name and location must be known and provided. In other words, when using the SFTP Connector:

- You cannot transfer the files in a directory by specifying just the source directory.
  - You can, however, specify the destination directory.
- You cannot use wildcard characters (*) to match and transfer multiple files.
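Since the connector does not expand wildcards, one workaround is to expand them yourself before starting the transfer. The sketch below assumes s3 is a Boto3 S3 client; it lists the objects under a prefix with the ListObjectsV2 paginator and keeps the keys matching a shell-style pattern via Python's fnmatch module. The function name is hypothetical.

```python
import fnmatch


def matching_send_file_paths(s3, bucket, prefix, pattern):
    """Expand a shell-style pattern (e.g. "*.csv") into explicit connector source paths.

    Only the part of each key after prefix is matched, case-sensitively,
    because S3 keys are case-sensitive.
    """
    paths = []
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # Pages with no objects have no "Contents" entry.
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if key.endswith("/"):
                continue  # skip zero-byte "folder" placeholder objects
            if fnmatch.fnmatchcase(key[len(prefix):], pattern):
                paths.append(f"/{bucket}/{key}")
    return paths
```

Note that * in fnmatch also matches "/", so "*.csv" under "out/" also picks up keys in nested folders such as out/2023/a.csv. The resulting list can be passed as the send file paths, in groups of at most 10.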

Sending files from S3 to a remote SFTP server (Outbound transfers)

To send files from an S3 Bucket to a remote SFTP server, the following AWS CLI command can be used:

```shell
aws transfer start-file-transfer \
    --connector-id <CONNECTOR_ID> \
    --send-file-paths <SOURCE_S3_OBJECT_PATH> \
    --remote-directory-path <DESTINATION_SFTP_SERVER_DIRECTORY>
```

The placeholder argument values are described as follows:

- <CONNECTOR_ID> - AWS Transfer Family SFTP Connector ID.
- <SOURCE_S3_OBJECT_PATH> - Source S3 object path, including the S3 bucket name and S3 object key, starting with a forward slash "/".
- <DESTINATION_SFTP_SERVER_DIRECTORY> - Destination remote SFTP server directory path. This can be an absolute directory path (starting with a forward slash "/") or a relative directory path from the SFTP user's home directory.
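S3 locations are often written as s3:// URIs, while the connector expects "/bucket/key". A small conversion helper, sketched under that assumption (the function name is hypothetical), avoids hand-editing paths:

```python
def s3_uri_to_connector_path(s3_uri):
    """Convert "s3://bucket/key" into the "/bucket/key" form used by the SFTP Connector.

    Works for source object paths (bucket plus key) and for S3 destination
    directories (bucket plus optional prefix).
    """
    scheme = "s3://"
    if not s3_uri.startswith(scheme):
        raise ValueError(f"not an S3 URI: {s3_uri!r}")
    # Split only on the first "/" so the key keeps its own slashes.
    bucket, _, key = s3_uri[len(scheme):].partition("/")
    if not bucket:
        raise ValueError(f"missing bucket name: {s3_uri!r}")
    return f"/{bucket}/{key}" if key else f"/{bucket}"
```

For example, s3_uri_to_connector_path("s3://freddy-test-aws-transfer/out/test1.txt") returns "/freddy-test-aws-transfer/out/test1.txt". String splitting is used instead of urllib.parse because S3 keys may legally contain "?" or "#", which a URL parser would treat as query or fragment separators.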

Example: AWS CLI command and diagram to send files from S3 to a remote SFTP server

```shell
$ aws transfer start-file-transfer \
    --connector-id c-1234567890abcdef \
    --send-file-paths /freddy-test-aws-transfer/out/test1.txt \
    --remote-directory-path /home/aws-transfer
{
    "TransferId": "4b323a4d-f5ec-4a60-ab64-5676646fa622"
}
```

Retrieving files from a remote SFTP server to S3 (Inbound transfers)

To retrieve files from a remote SFTP server into an S3 bucket, the following AWS CLI command can be used:

```shell
aws transfer start-file-transfer \
    --connector-id <CONNECTOR_ID> \
    --retrieve-file-paths <SOURCE_SFTP_SERVER_FILE_PATH> \
    --local-directory-path <DESTINATION_S3_DIRECTORY>
```

The placeholder argument values are described as follows:

- <CONNECTOR_ID> - AWS Transfer Family SFTP Connector ID.
- <SOURCE_SFTP_SERVER_FILE_PATH> - Source remote SFTP server file path. This can be an absolute file path (starting with a forward slash "/") or a relative file path from the SFTP user's home directory.
- <DESTINATION_S3_DIRECTORY> - Destination S3 location, including the S3 bucket name and an optional S3 object prefix (directory), starting with a forward slash "/".

Example: AWS CLI command and diagram to retrieve files from remote SFTP server to S3

```shell
$ aws transfer start-file-transfer \
    --connector-id c-1234567890abcdef \
    --retrieve-file-paths /home/aws-transfer/test2.txt \
    --local-directory-path /freddy-test-aws-transfer/in
{
    "TransferId": "afb6ee7e-230e-437e-807c-7d9f5ed070f1"
}
```
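The same inbound transfer can be started from Python. This sketch assumes transfer is a Boto3 client from boto3.client("transfer"); it uses the StartFileTransfer parameters RetrieveFilePaths and LocalDirectoryPath, which mirror the CLI flags above. The function name and the bucket/prefix split are illustrative choices.

```python
def retrieve_files(transfer, connector_id, remote_file_paths, bucket, prefix=""):
    """Boto3 equivalent of the inbound start-file-transfer command.

    remote_file_paths are explicit SFTP server file paths (no wildcards).
    Files land in s3://bucket/prefix. Returns the TransferId.
    """
    if not 1 <= len(remote_file_paths) <= 10:
        raise ValueError("pass between 1 and 10 remote file paths per call")
    # Build the "/bucket[/prefix]" form expected for LocalDirectoryPath.
    prefix = prefix.strip("/")
    local_directory_path = f"/{bucket}/{prefix}" if prefix else f"/{bucket}"
    response = transfer.start_file_transfer(
        ConnectorId=connector_id,
        RetrieveFilePaths=list(remote_file_paths),
        LocalDirectoryPath=local_directory_path,
    )
    return response["TransferId"]
```

Calling retrieve_files(client, "c-1234567890abcdef", ["/home/aws-transfer/test2.txt"], "freddy-test-aws-transfer", "in") issues the same request as the CLI example.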

SFTP Connector Logs

The SFTP Connector transfer events are logged to CloudWatch Logs if the SFTP Connector has been configured with a Logging IAM role. Logs are written to a log group with the following name convention:

/aws/transfer/<CONNECTOR_ID>

The AWS CLI command used to perform the transfer (aws transfer start-file-transfer) outputs a transfer ID (TransferId), which also appears in the logged transfer events.

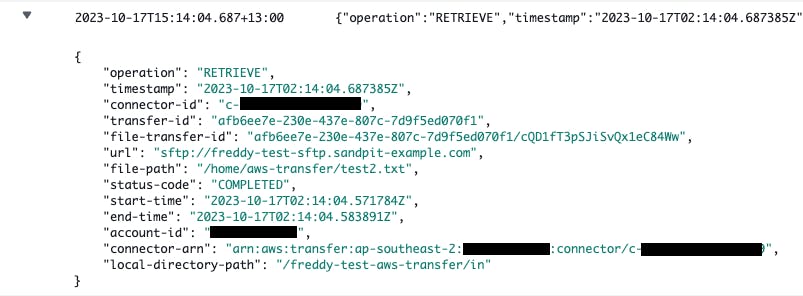

Below are examples of the logged transfer events from the example AWS CLI commands in the section Using the SFTP Connector with the AWS CLI.

Example: Logged outbound transfer event

Example: Logged inbound transfer event
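To look up a transfer by its ID without browsing the console, the CloudWatch Logs FilterLogEvents API can search the connector's log group for the ID as a quoted term. The sketch below assumes logs is a Boto3 client from boto3.client("logs"), that each log message is a JSON object, and that the outcome is in a field named "status-code"; these field names are assumptions based on the logged events shown above, so check them against your own logs.

```python
import json


def transfer_log_events(logs, connector_id, transfer_id):
    """Return parsed log events from /aws/transfer/<connector_id> that mention transfer_id."""
    kwargs = {
        "logGroupName": f"/aws/transfer/{connector_id}",
        # Quotes make the hyphenated ID match as one exact term.
        "filterPattern": f'"{transfer_id}"',
    }
    events = []
    while True:
        response = logs.filter_log_events(**kwargs)
        for event in response.get("events", []):
            try:
                events.append(json.loads(event["message"]))
            except json.JSONDecodeError:
                # Keep non-JSON lines as raw text rather than dropping them.
                events.append({"message": event["message"]})
        token = response.get("nextToken")
        if not token:
            return events
        kwargs["nextToken"] = token


def all_completed(events):
    """True if there is at least one event and every event reports COMPLETED.

    The "status-code" field name is an assumption about the log format.
    """
    return bool(events) and all(e.get("status-code") == "COMPLETED" for e in events)
```

Logs can take a short while to arrive, so an empty result straight after starting a transfer does not necessarily mean the transfer failed.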

Automate transfer of files to a remote SFTP server from an S3 Bucket

In this section, I will provide a solution to automate the upload of files placed in an S3 bucket to a remote SFTP server. This solution uses S3 Bucket Notifications with Amazon EventBridge to capture S3 object creation events. The target of these events is a Lambda function that uses the SFTP Connector to upload the newly created S3 object.

This solution assumes the following:

You have created a working SFTP connector for an existing remote SFTP server (see Creation of the SFTP connector with logging to CloudWatch Logs).

The SFTP connector has IAM permission to access an existing S3 Bucket.

Create a Lambda Function to transfer files using the SFTP Connector

First, we create an IAM role for a Lambda function with permission to call StartFileTransfer on the SFTP Connector.

Lambda Function IAM role trust policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "lambda.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

Lambda Function IAM role permissions policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "CloudWatchLogging",
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "*"
    },
    {
      "Sid": "StartSFTPConnectorTransfer",
      "Effect": "Allow",
      "Action": [
        "transfer:StartFileTransfer"
      ],
      "Resource": [
        "arn:aws:transfer:<REGION>:<ACCOUNT_ID>:connector/<CONNECTOR_ID>"
      ]
    }
  ]
}
```

We will create a Lambda Function using Python and the AWS SDK Boto3.

At the time of writing, the latest Python runtime available in Lambda is Python 3.11, which includes Boto3 1.27.1. Using the Transfer Family API with SFTP connectors requires Boto3 1.28.11 or later, so we need to bundle this version of Boto3 (or newer) in our Lambda function .zip file.
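To confirm that the bundled Boto3 is the one actually loaded, the function can log boto3.__version__ and compare it with the 1.28.11 minimum. The comparison below is a small sketch; it compares numeric tuples rather than strings, because as plain strings "1.28.9" sorts after "1.28.11". The helper names are hypothetical.

```python
import re

# Minimum Boto3 version for SFTP connector support, as stated above.
MIN_BOTO3_FOR_SFTP_CONNECTORS = (1, 28, 11)


def version_tuple(version):
    """Turn "1.28.11" into (1, 28, 11), keeping only the first three numbers."""
    return tuple(int(n) for n in re.findall(r"\d+", version)[:3])


def supports_sftp_connectors(boto3_version):
    return version_tuple(boto3_version) >= MIN_BOTO3_FOR_SFTP_CONNECTORS
```

Inside the Lambda function this could be used as supports_sftp_connectors(boto3.__version__), logging a warning when it returns False.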

In an empty working directory, create the main Lambda Function Python file called lambda_function.py with the following content:

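```python
import os

import boto3

# Created once per execution environment so warm invocations reuse the client.
client = boto3.client("transfer")

# Set as Lambda environment variables when configuring the function.
CONNECTOR_ID = os.environ["CONNECTOR_ID"]
REMOTE_DIRECTORY_PATH = os.environ["REMOTE_DIRECTORY_PATH"]


def lambda_handler(event, context):
    # EventBridge "Object Created" events carry the bucket and key under "detail".
    bucket_name = event["detail"]["bucket"]["name"]
    object_key = event["detail"]["object"]["key"]

    # The SFTP Connector expects S3 paths in the form /<bucket>/<key>.
    send_file_path = f"/{bucket_name}/{object_key}"
    print(f"SFTP transfer S3 '{send_file_path}' to SFTP server path '{REMOTE_DIRECTORY_PATH}'")

    response = client.start_file_transfer(
        ConnectorId=CONNECTOR_ID,
        SendFilePaths=[send_file_path],
        RemoteDirectoryPath=REMOTE_DIRECTORY_PATH,
    )
    # The response includes the TransferId, which also appears in the connector logs.
    print(response)
```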
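The handler relies on only two fields of the EventBridge event. The sketch below is a trimmed sample event (a real "Object Created" event carries many more fields, such as the account, region and object size) together with the same path-building logic pulled into a hypothetical helper, so it can be checked locally without calling AWS.

```python
# Trimmed EventBridge "Object Created" event with only the fields the handler reads.
SAMPLE_EVENT = {
    "source": "aws.s3",
    "detail-type": "Object Created",
    "detail": {
        "bucket": {"name": "freddy-test-aws-transfer"},
        "object": {"key": "out/test1.txt"},
    },
}


def send_file_path_from_event(event):
    """Same path-building logic as lambda_handler, isolated for local testing."""
    bucket_name = event["detail"]["bucket"]["name"]
    object_key = event["detail"]["object"]["key"]
    return f"/{bucket_name}/{object_key}"


print(send_file_path_from_event(SAMPLE_EVENT))  # /freddy-test-aws-transfer/out/test1.txt
```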

Install the latest version of Boto3 into the working directory:

```shell
pip install boto3 -t .
```

Your working directory should now have lambda_function.py and the Boto3 package files:

```text
├── __pycache__
├── bin
├── boto3
...
├── lambda_function.py
...
```

Create the Lambda Function zip archive file from the contents of your working directory:

```shell
# Run from within your working directory
zip -r lambda-function.zip .
```
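Where the zip utility is not available (for example on some Windows machines), Python's standard zipfile module can build an equivalent archive. This is a sketch of one approach, not the only one: it stores paths relative to the working directory so that lambda_function.py sits at the root of the archive, and, unlike zip -r, it skips __pycache__ folders, which are not needed to run the function.

```python
import os
import zipfile


def build_lambda_zip(source_dir, zip_path):
    """Zip the contents of source_dir (not the directory itself), similar to zip -r run inside it."""
    source_dir = os.path.abspath(source_dir)
    zip_path = os.path.abspath(zip_path)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for root, dirs, files in os.walk(source_dir):
            # Prune bytecode cache folders so os.walk does not descend into them.
            dirs[:] = [d for d in dirs if d != "__pycache__"]
            for name in files:
                full_path = os.path.join(root, name)
                if full_path == zip_path:
                    continue  # don't add the archive being written into itself
                archive.write(full_path, arcname=os.path.relpath(full_path, source_dir))
    return zip_path
```

For example, build_lambda_zip(".", "lambda-function.zip") run from the working directory produces lambda-function.zip alongside the source files.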

In the AWS Lambda Console choose Create function.

Select Author from scratch.

Provide a name for the function.

Under Runtime, select Python 3.11.

Under Permissions > Change default execution role > Execution role, select Use an existing role and choose the IAM role you created (this example uses the IAM role name "freddy-test-lambda-aws-transfer-sftp-connector").

Choose Create function.

Under Code, choose Upload from .zip file and upload the lambda-function.zip file you created previously.

Under Configuration > Environment variables, set the following environment variables:

CONNECTOR_ID - Your SFTP Connector ID.

REMOTE_DIRECTORY_PATH - Your remote SFTP server directory path to upload files to.
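The same environment variables can be set with Boto3. One pitfall: UpdateFunctionConfiguration replaces the function's entire set of environment variables, so this sketch reads the current ones first and merges the two new values in. It assumes lambda_client is a client from boto3.client("lambda"); the function name is hypothetical.

```python
def set_connector_env(lambda_client, function_name, connector_id, remote_directory_path):
    """Set CONNECTOR_ID and REMOTE_DIRECTORY_PATH, keeping any existing variables."""
    current = lambda_client.get_function_configuration(FunctionName=function_name)
    # Functions without environment variables have no "Environment" key.
    variables = dict(current.get("Environment", {}).get("Variables", {}))
    variables.update({
        "CONNECTOR_ID": connector_id,
        "REMOTE_DIRECTORY_PATH": remote_directory_path,
    })
    lambda_client.update_function_configuration(
        FunctionName=function_name,
        Environment={"Variables": variables},
    )
    return variables
```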

Set up S3 Bucket Notifications with Amazon EventBridge

We will now configure S3 Bucket Notifications using Amazon EventBridge to invoke the Lambda function when new objects are created in the bucket.

In the Amazon S3 console, navigate to your existing S3 bucket.

Under the S3 bucket's Properties > Event notifications, under Amazon EventBridge, choose Edit.

Under Send notifications to Amazon EventBridge for all events in this bucket, choose On then choose Save changes.
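The console toggle above can also be scripted. PutBucketNotificationConfiguration replaces the bucket's whole notification configuration, so this sketch reads the existing configuration first and only adds an empty EventBridgeConfiguration entry, which is what turns EventBridge delivery on. It assumes s3 is a client from boto3.client("s3"); the function name is hypothetical.

```python
def enable_eventbridge_notifications(s3, bucket):
    """Turn on "Send notifications to Amazon EventBridge" without dropping existing notifications."""
    config = s3.get_bucket_notification_configuration(Bucket=bucket)
    # The response metadata is not part of the configuration and must not be sent back.
    config.pop("ResponseMetadata", None)
    config["EventBridgeConfiguration"] = {}
    s3.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=config,
    )
    return config
```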

In the Amazon EventBridge Console Get started page, select EventBridge Rule and choose Create rule.

Under Define rule detail provide a Name for the rule.

Under Event bus, select default.

Under Rule type, select Rule with an event pattern. Then choose Next.

Under Build event pattern > Event source choose Other.

Under Event pattern, enter the following JSON, replacing <S3_BUCKET_NAME> with your own S3 Bucket name, then choose Next.

```json
{
  "source": ["aws.s3"],
  "detail-type": ["Object Created"],
  "detail": {
    "bucket": {
      "name": ["<S3_BUCKET_NAME>"]
    }
  }
}
```

Under Select target > Target types, choose AWS service. Select Lambda function and the Lambda Function you have created previously, then choose Next.

Optionally configure tags then choose Next.

Under Review and Create, choose Create rule.
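The rule can also be created with Boto3. When the console adds a Lambda target it also grants EventBridge permission to invoke the function; from code that permission has to be added explicitly with Lambda's AddPermission call. The sketch also shows an optional key prefix filter using EventBridge prefix matching. This is a design choice, not part of the walkthrough above: if inbound transfers write into the same bucket (for example under in/), a rule without a prefix filter could send those files straight back out. It assumes events and lambda_client are clients from boto3.client("events") and boto3.client("lambda"); the rule name, target ID and statement ID are hypothetical.

```python
import json


def build_event_pattern(bucket_name, key_prefix=None):
    """Event pattern from the walkthrough, optionally narrowed to keys under key_prefix."""
    detail = {"bucket": {"name": [bucket_name]}}
    if key_prefix:
        # EventBridge prefix matching, e.g. only objects under "out/".
        detail["object"] = {"key": [{"prefix": key_prefix}]}
    return {"source": ["aws.s3"], "detail-type": ["Object Created"], "detail": detail}


def create_rule_for_lambda(events, lambda_client, rule_name, bucket_name,
                           function_arn, key_prefix=None):
    """Create the rule on the default event bus, target the function, and allow invocation."""
    rule_arn = events.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(build_event_pattern(bucket_name, key_prefix)),
        State="ENABLED",
    )["RuleArn"]
    result = events.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "sftp-connector-lambda", "Arn": function_arn}],
    )
    if result.get("FailedEntryCount"):
        raise RuntimeError(f"put_targets failed: {result.get('FailedEntries')}")
    # Resource-based permission so EventBridge (only this rule) can invoke the function.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    return rule_arn
```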

Test the automated SFTP upload of files from S3 Bucket

Upload a file into your S3 bucket.

Below is an example AWS CLI command to upload the file "test1.txt" into the S3 Bucket "freddy-test-aws-transfer", directory "out/".

```shell
$ aws s3 cp test1.txt s3://freddy-test-aws-transfer/out/
upload: ./test1.txt to s3://freddy-test-aws-transfer/out/test1.txt
```

Upon uploading a file to the S3 Bucket, we should expect to see the file appear on the remote SFTP server after a short time. For this example, the file landing location on the remote SFTP server is:

/home/aws-transfer/landing/test1.txt

When the file is uploaded to the S3 bucket, the resulting Object Created event should trigger an invocation of the Lambda function. Evidence of this can be seen in the Lambda function logs in CloudWatch Logs (log group name "/aws/lambda/<lambda_function_name>").

Below is an example of the invoked Lambda Function logs.

The log message shows the SFTP transfer of the created S3 object /freddy-test-aws-transfer/out/test1.txt to the remote SFTP server path directory /home/aws-transfer/landing. Note that the SFTP server path directory was specified by the Lambda Function environment variable "REMOTE_DIRECTORY_PATH".

The log message also shows the SFTP Connector transfer ID.

We can confirm that the Lambda function used the SFTP Connector to successfully upload the file to the remote SFTP server by checking the SFTP Connector logs in CloudWatch Logs (log group name "/aws/transfer/<connector_id>").

The below example shows the SFTP Connector logs.

The log message shows a transfer event status code of "COMPLETED", with the same transfer ID, source and destination as shown in the Lambda function logs.

Conclusion

A recent addition to AWS Transfer Family is the SFTP Connector. The SFTP Connector can be used to transfer files between a remote SFTP server and an S3 bucket. The remote SFTP server needs to be publicly accessible over the internet to be reachable by the SFTP Connector.

The SFTP Connector uses SFTP user credentials that are stored in AWS Secrets Manager. These can be a username with a password and/or a private key. Transfer events can be configured to log to CloudWatch Logs.

Using the SFTP Connector, it is possible to automate the upload of files placed in an S3 Bucket to a remote SFTP server. This can be achieved by configuring S3 Bucket notifications to trigger a Lambda Function. The Lambda Function can then use the SFTP Connector to upload the S3 object file.

The SFTP Connector is straightforward to set up for serverless file transfers between S3 and a public SFTP server. However, a few features that are currently missing would make this service better. It would be good to be able to assign a static IP address to the SFTP Connector, which would allow an IP allow list to be set up on the remote SFTP server for extra security. It would also be handy to have an API call to check the status of an SFTP Connector transfer job using the transfer ID. Currently, the only way to do this is by checking the SFTP Connector logs in CloudWatch Logs.